The underwater sequences in James Cameron’s “Avatar: The Way of Water,” and in particular the way CGI light refracts through them, represent one of the most ambitious technical achievements in visual effects history. When the film premiered in December 2022, audiences saw computer-generated underwater environments with a degree of photorealism that few, if any, earlier films had attempted, and light that behaved much as it does in the physical world. This was not simply a matter of making water look wet. The challenge required Weta FX to rethink how digital light interacts with virtual water, relying on proprietary rendering systems that simulate the physics of photons bending, scattering, and being absorbed as they pass through oceanic environments. The importance of accurate light refraction in underwater CGI extends far beyond technical showmanship.

Water fundamentally changes how we perceive the world beneath its surface. Objects appear displaced from their actual positions. Colors shift toward blue-green as depth increases. Caustic patterns dance across the seafloor as sunlight filters through waves above. Viewers have an intuitive sense of how light behaves, so any deviation from these expected behaviors quickly registers as artificial. For a film that keeps audiences beneath the waves of an alien ocean for a substantial part of its more than three-hour runtime, getting these details wrong would have shattered the immersive experience Cameron spent over a decade developing.

By reading this analysis, you will understand the specific technical innovations that made Avatar’s underwater sequences possible, the physics principles that governed their creation, and why this achievement matters for the future of digital filmmaking. The techniques behind this production are likely to influence visual effects pipelines across the industry and to shape expectations for aquatic CGI for years to come.

Table of Contents

- How Does Light Refraction Work in Avatar’s Underwater CGI Sequences?

- The Physics of Underwater Light Behavior in Digital Environments

- Caustics and Dappled Light in Avatar’s Ocean Rendering

- Rendering Techniques for Realistic Underwater Light in Film

- Common Challenges in CGI Underwater Light Refraction

- The Influence of Real Underwater Photography on Digital Recreation

- How to Prepare

- How to Apply This

- Expert Tips

- Conclusion

- Frequently Asked Questions

How Does Light Refraction Work in Avatar’s Underwater CGI Sequences?

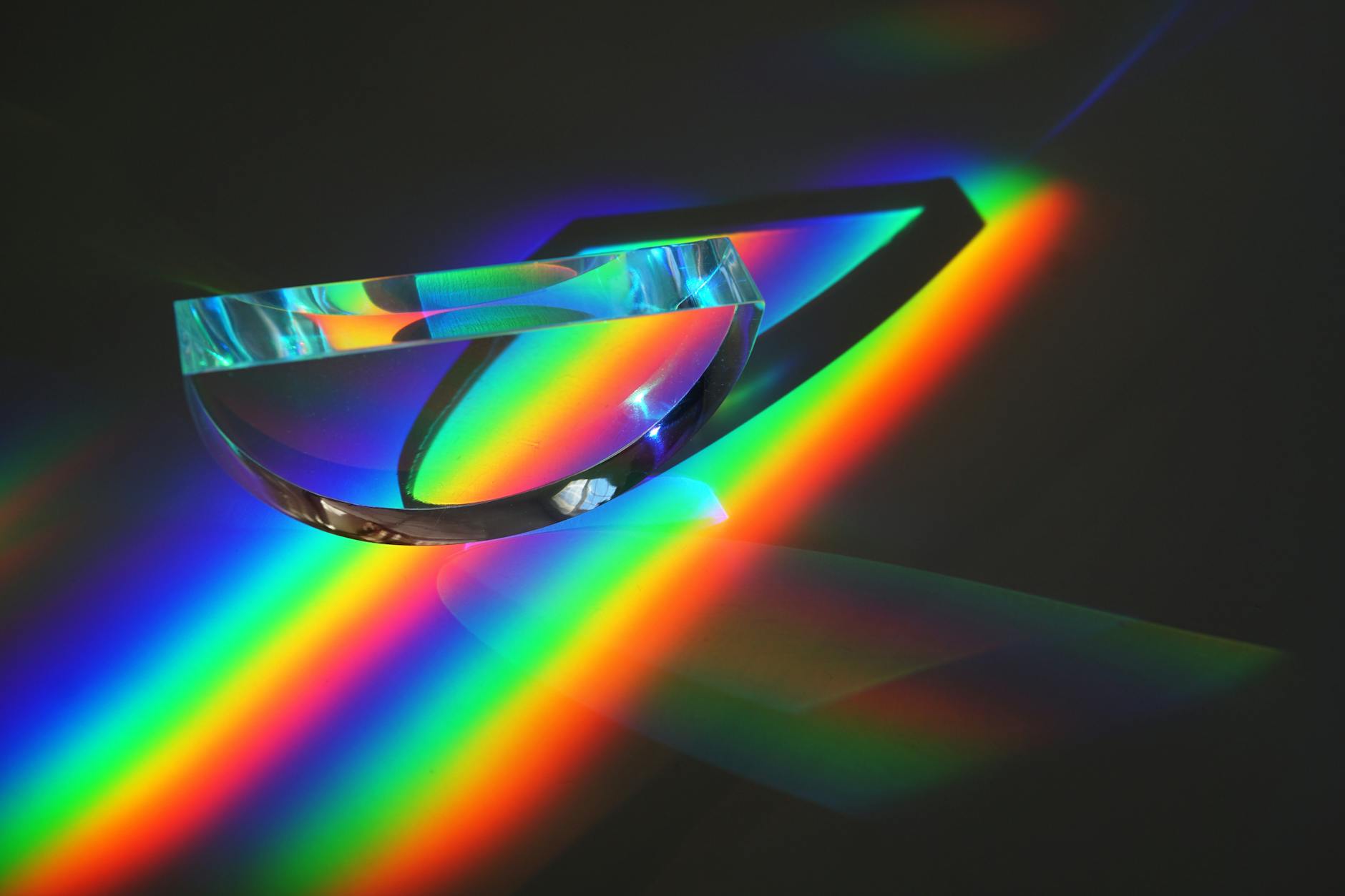

Light refraction occurs when photons pass from one medium into another with a different optical density, causing them to change direction. In the case of water, light traveling from air bends toward the perpendicular as it enters the denser medium, then bends away when exiting back into air. This phenomenon, governed by Snell’s Law, creates the familiar visual distortions we associate with looking into pools, aquariums, and oceans. For Avatar: The Way of Water, Weta FX needed to simulate this behavior across billions of individual light rays interacting with constantly shifting water surfaces, suspended particles, and the bodies of swimming characters.
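In a ray tracer, Snell’s Law is usually applied in vector form so that rays can be bent against an arbitrarily oriented water surface. The sketch below is a generic textbook formulation, not Weta’s code; the function name `refract` and the index of 1.333 used for water are illustrative assumptions.

```python
import math

def refract(direction, normal, n1, n2):
    """Bend a unit direction vector at a surface using Snell's Law in vector form.

    direction: unit vector of the incoming ray.
    normal:    unit surface normal on the side the ray arrives from.
    n1, n2:    refractive indices of the incoming and outgoing media.
    Returns the refracted unit vector, or None for total internal reflection.
    """
    eta = n1 / n2
    cos_i = -sum(d * n for d, n in zip(direction, normal))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # no transmitted ray: the surface acts as a mirror
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * d + (eta * cos_i - cos_t) * n for d, n in zip(direction, normal))

# Sunlight entering water 45 degrees from vertical (y points up, 1.333 assumed for water).
theta = math.radians(45.0)
ray = (math.sin(theta), -math.cos(theta), 0.0)
bent = refract(ray, (0.0, 1.0, 0.0), 1.0, 1.333)
print(math.degrees(math.asin(bent[0])))  # angle from vertical inside the water
```

A return value of `None` signals total internal reflection, which can only happen when light travels from the denser medium (water) toward the less dense one (air).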

Weta’s in-house renderer, Manuka, is a spectral path tracer: it carries individual wavelengths of light along each simulated path rather than treating white light as a single RGB quantity. This distinction matters because different wavelengths refract at slightly different angles, a phenomenon called chromatic dispersion. When white light enters water at an angle, red wavelengths bend less than violet wavelengths, creating subtle color separation at object edges. Traditional CGI rendering often ignores this effect for computational efficiency, but its absence contributes to the artificial quality of many underwater visual effects. Because Avatar’s system calculated these wavelength-specific refractions, it could reproduce the faint color fringing visible around characters and objects in the underwater sequences.
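To get a feel for how small the effect is, the following sketch applies Snell’s Law separately at a few wavelengths. The refractive indices are approximate values for pure water at room temperature, assumed for illustration; a spectral renderer samples wavelengths continuously rather than from a fixed table like this one.

```python
import math

# Approximate refractive indices of pure water near room temperature,
# keyed by wavelength in nanometers (illustrative values; seawater runs
# slightly higher and varies with salinity and temperature).
WATER_INDEX = {405: 1.343, 486: 1.337, 589: 1.333, 656: 1.331}

def refraction_angle_deg(incidence_deg, n1, n2):
    """Angle of the refracted ray from the surface normal (Snell's Law)."""
    return math.degrees(math.asin(n1 * math.sin(math.radians(incidence_deg)) / n2))

def dispersion_fan(incidence_deg):
    """Refraction angle inside the water for each sampled wavelength (air -> water)."""
    return {wl: refraction_angle_deg(incidence_deg, 1.0, n) for wl, n in WATER_INDEX.items()}

for wl, angle in sorted(dispersion_fan(45.0).items()):
    print(f"{wl} nm: {angle:.2f} degrees")
```

At 45 degrees of incidence the violet and red rays end up only about 0.3 degrees apart, which is why the fringing is subtle and easily lost when light is treated as a single RGB value.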

- **Snell’s Law implementation**: The rendering engine calculated refraction angles based on accurate refractive indices for seawater, accounting for salinity and temperature variations at different depths

- **Wavelength-dependent refraction**: Light was traced per wavelength rather than as combined red, green, and blue channels, producing authentic chromatic dispersion at water-air boundaries

- **Dynamic surface interaction**: Refraction calculations were recomputed for every frame of the animated water surface, producing constantly shifting caustic patterns

The Physics of Underwater Light Behavior in Digital Environments

Beyond simple refraction, underwater light behavior involves absorption, scattering, and the complex interplay between these phenomena. Across the visible spectrum, water absorbs longer wavelengths more rapidly than shorter ones, which is why the ocean appears blue and why objects lose their red coloration as depth increases. In clear ocean water, most red light is absorbed within the first 5 to 10 meters, and much of the orange is gone by roughly 20 to 25 meters. This selective absorption creates the characteristic blue-green palette of underwater photography and must be accurately simulated for CGI to appear convincing. Weta FX implemented volumetric absorption calculations throughout their underwater environments, tracking how much of each wavelength survived as light traveled through the virtual water. This was not a simple post-process color filter applied to finished images.

Instead, the absorption was calculated per ray, meaning light that traveled a longer path through water would appear more blue-shifted than light taking a direct route. This produced accurate color gradients in which the tops of objects appeared warmer than their undersides, matching real underwater photography. Scattering presented its own challenges. Water contains countless microscopic particles, from plankton to sediment, that redirect light as it passes. This scattering creates the hazy, atmospheric quality of underwater environments, reducing visibility and softening shadows. The Avatar team developed particle simulation systems that populated the virtual water with billions of suspended elements, each capable of redirecting light rays. The density and distribution of these particles varied with depth, proximity to the seafloor, and environmental conditions, creating naturalistic variations in water clarity throughout different scenes.
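Per-ray absorption of this kind is usually modeled with the Beer-Lambert law: the fraction of a wavelength that survives a path of length d is exp(-k * d), where k is that wavelength’s absorption coefficient. The coefficients below are rough, assumed values for clear water at three representative wavelengths, not figures from the production.

```python
import math

# Rough absorption coefficients of clear water in 1/m, keyed by wavelength
# in nanometers (assumed, illustrative values).
ABSORPTION_PER_M = {650: 0.35, 550: 0.06, 450: 0.01}

def transmittance(path_length_m):
    """Fraction of each wavelength surviving a path through water (Beer-Lambert)."""
    return {wl: math.exp(-k * path_length_m) for wl, k in ABSORPTION_PER_M.items()}

# Two rays reaching the camera: one with a short path, one with a long path.
short_path = transmittance(2.0)
long_path = transmittance(15.0)
for wl in sorted(ABSORPTION_PER_M):
    print(f"{wl} nm: {short_path[wl]:.3f} after 2 m, {long_path[wl]:.3f} after 15 m")
```

Over 15 meters of path, this model leaves less than 1 percent of the red light but roughly 86 percent of the blue, which is the path-dependent color shift described above.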

- **Depth-dependent color absorption**: Each meter of simulated water along a ray’s path removed a fixed fraction of each wavelength, with longer wavelengths losing more, matching real-world absorption coefficients

- **Volumetric scattering**: Suspended particle systems created authentic underwater haze without relying on post-production fog effects

- **Multiple scattering calculations**: Light rays could scatter multiple times before reaching the camera, producing soft, diffuse illumination characteristic of underwater environments
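The basic building blocks of volumetric scattering in a path tracer can be sketched in a few lines, assuming a homogeneous medium: the distance a photon travels before its next interaction is exponentially distributed, and at each interaction it scatters with probability equal to the medium’s single-scattering albedo. The function names and parameter values are hypothetical; a medium whose particle density varies with depth, as described above, typically needs techniques such as delta tracking instead of this closed-form sampling.

```python
import math
import random

def sample_free_flight(sigma_t, rng):
    """Distance (m) to the next interaction in a homogeneous medium whose
    extinction coefficient is sigma_t (1/m); exponential with mean 1/sigma_t."""
    return -math.log(1.0 - rng.random()) / sigma_t

def scatter_count(albedo, rng):
    """Number of times a photon scatters before it is absorbed, in an
    unbounded medium where each interaction is a scatter with probability albedo."""
    count = 0
    while rng.random() < albedo:
        count += 1
    return count

rng = random.Random(7)
n = 100_000
mean_distance = sum(sample_free_flight(0.5, rng) for _ in range(n)) / n
mean_scatters = sum(scatter_count(0.8, rng) for _ in range(n)) / n
print(f"mean free path ~ {mean_distance:.2f} m, mean scatters ~ {mean_scatters:.2f}")
```

With an albedo of 0.8, a photon scatters four times on average before being absorbed, which is why multiple scattering matters so much for the soft, diffuse look of turbid water.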

Caustics and Dappled Light in Avatar’s Ocean Rendering

Caustics, the rippling patterns of focused light that dance across underwater surfaces, represent one of the most visually distinctive aspects of underwater environments. These patterns form when the curved surface of water acts as a dynamic lens, focusing and defocusing sunlight as waves pass overhead. The mathematical complexity of calculating accurate caustics has historically made them one of the most challenging aspects of underwater CGI. Many productions have relied on projected texture patterns that move in predefined loops, a shortcut that works for brief shots but becomes noticeable over extended underwater sequences. For Avatar: The Way of Water, caustics were calculated through actual light simulation rather than texture projection. The rendering system traced photons from the virtual sun through the animated water surface, calculating how each ray bent upon entering the water and where it ultimately landed. Millions of these rays, aggregated together, produced caustic patterns that organically responded to wave motion above.
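The sketch below is a toy, two-dimensional version of this idea: parallel sunlight is traced forward through a sinusoidal water surface, refracted with Snell’s Law, and binned where it lands on a flat floor. The wave shape, dimensions, and function names are assumptions for illustration and say nothing about Weta’s implementation.

```python
import math

def refract2d(d, n, eta):
    """Snell's Law in vector form for 2D unit vectors; n faces the incoming ray."""
    cos_i = -(d[0] * n[0] + d[1] * n[1])
    # Going from air into water (eta < 1), total internal reflection cannot occur.
    cos_t = math.sqrt(1.0 - eta * eta * (1.0 - cos_i * cos_i))
    k = eta * cos_i - cos_t
    return (eta * d[0] + k * n[0], eta * d[1] + k * n[1])

def caustic_histogram(amplitude=0.05, wavelength=2.0, depth=5.0, rays=100_000, bins=40):
    """Trace vertical sun rays through the surface y = amplitude * sin(2*pi*x / wavelength)
    down to a flat floor at y = -depth, counting hits per bin over one wave period."""
    k_wave = 2.0 * math.pi / wavelength
    eta = 1.0 / 1.333                                  # air -> water index ratio (assumed)
    counts = [0] * bins
    for i in range(rays):
        x0 = (i + 0.5) / rays * wavelength             # evenly spaced rays over one period
        h = amplitude * math.sin(k_wave * x0)          # surface height where the ray enters
        slope = amplitude * k_wave * math.cos(k_wave * x0)
        norm = math.hypot(slope, 1.0)
        normal = (-slope / norm, 1.0 / norm)           # upward surface normal, facing the sun
        tx, ty = refract2d((0.0, -1.0), normal, eta)
        x_floor = x0 + tx * (h + depth) / -ty          # follow the refracted ray to the floor
        b = int((x_floor % wavelength) / wavelength * bins)  # wrap the periodic pattern
        counts[min(b, bins - 1)] += 1
    return counts

flat = caustic_histogram(amplitude=0.0)
wavy = caustic_histogram()
print("flat surface, brightest/mean:", max(flat) / (sum(flat) / len(flat)))
print("wavy surface, brightest/mean:", max(wavy) / (sum(wavy) / len(wavy)))
```

With a flat surface every bin receives the same number of rays; with the default 5 centimeter ripple, the brightest bin collects more than twice the average, which is the focusing that produces caustic bands. Animating the surface height over time and re-tracing each frame makes the bands move with the waves.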

When characters swam near the surface, the caustic patterns on their bodies shifted in perfect synchronization with the overhead waves. When the sea grew calm, the patterns became more stable and focused. During storms, they fragmented into chaotic, rapidly shifting configurations. This physically accurate approach extended to secondary caustics as well. Light reflecting off the seafloor could create secondary caustic patterns on the undersides of floating objects. Light passing through transparent creatures produced focused bright spots behind them. These subtle effects, rarely seen in CGI because of their computational expense, added layers of authenticity that registered subconsciously with audiences even when not consciously noticed.

- **Wave-synchronized caustics**: Caustic patterns were directly computed from water surface animation rather than using pre-rendered texture loops

- **Multiple-bounce illumination**: Light could reflect off surfaces and create secondary caustic effects throughout the scene

- **Temporal coherence**: Caustic movements remained smooth and physically plausible across frames, avoiding the flickering common in less sophisticated implementations

Rendering Techniques for Realistic Underwater Light in Film

The practical implementation of these lighting systems required significant departures from standard visual effects workflows. Traditional film rendering often separates different lighting contributions into layers that compositors can adjust independently. Direct light, indirect bounces, reflections, and refractions might each render separately, then combine in post-production. This approach offers flexibility but can introduce inconsistencies when the separated elements are recombined, particularly for complex optical phenomena like underwater refraction. Avatar’s pipeline instead emphasized what the team called “physical plausibility” over compositional flexibility.

Lighting effects that would traditionally render separately were calculated together in unified passes, ensuring that refractions, caustics, and scattering all interacted correctly. This required substantially more computational resources per frame but eliminated the visual artifacts that often result from combining separately rendered optical effects. The trade-off was worthwhile for extended underwater sequences where audiences had ample time to notice any inconsistencies. Render times for complex underwater shots reportedly extended to over 100 hours per frame during production, necessitating the use of Weta’s massive render farm alongside cloud computing resources. The studio reportedly processed over 3,200 terabytes of data for the film’s visual effects, with underwater sequences consuming a disproportionate share of that storage. These requirements pushed the boundaries of what was practically achievable with contemporary hardware, and several optimizations developed for the film have reportedly since found their way into commercial rendering software.

- **Unified optical rendering**: Refraction, reflection, and scattering calculated together rather than separated into compositable layers

- **Adaptive ray sampling**: More computational resources were allocated to complex optical regions while simpler areas used fewer samples

- **Machine learning denoising**: AI systems cleaned up statistical noise from ray-traced images without removing legitimate optical detail
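Adaptive sampling can be implemented in many ways, and the details of Weta’s scheme are not described here. One common textbook approach, sketched below with a hypothetical `allocate_samples` helper, is to render a cheap pilot pass, estimate each pixel’s variance, and then split the remaining sample budget in proportion to each pixel’s standard deviation.

```python
import math

def allocate_samples(pilot_variances, budget, min_samples=4):
    """Split a per-frame sample budget across pixels in proportion to the
    standard deviation measured in a cheap pilot pass.

    pilot_variances: estimated per-pixel variance from the pilot samples.
    budget: total samples to distribute (at least min_samples per pixel).
    Returns a list of sample counts that sums exactly to budget.
    """
    n = len(pilot_variances)
    if budget < min_samples * n:
        raise ValueError("budget too small for the minimum per-pixel samples")
    weights = [math.sqrt(max(v, 0.0)) for v in pilot_variances]
    total_w = sum(weights)
    spare = budget - min_samples * n
    if total_w == 0.0:
        shares = [spare / n] * n          # no variance information: split evenly
    else:
        shares = [spare * w / total_w for w in weights]
    counts = [min_samples + int(s) for s in shares]
    # Hand out samples lost to rounding, largest fractional remainder first.
    leftover = budget - sum(counts)
    order = sorted(range(n), key=lambda i: shares[i] - int(shares[i]), reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    return counts

print(allocate_samples([0.0, 0.01, 0.04, 1.0], 400))
```

Allocating in proportion to the standard deviation, rather than the variance, minimizes the summed variance of the pixel estimates for a fixed budget, because each pixel’s error variance shrinks as its variance divided by its sample count. The minimum per-pixel count keeps flat regions from being starved entirely.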

Common Challenges in CGI Underwater Light Refraction

One persistent challenge in underwater CGI involves the interface between water and air, particularly at the surface. When a camera looks up through water toward the sky, what it sees depends on the viewing angle. Light from above the surface reaches an underwater viewer only within a cone around the vertical, known as Snell’s window, whose half-angle equals the critical angle of about 48.6 degrees for a water-to-air interface. Beyond that angle, the surface acts as a perfect mirror, reflecting the underwater environment rather than transmitting the sky above. This phenomenon, called total internal reflection, creates the shimmering, mirror-like quality visible when looking up from underwater. Simulating it correctly requires the rendering system to determine, for every ray, whether light will transmit through the surface or reflect, and to reproduce the steep rise in reflectance just inside the critical angle. Avatar’s underwater sequences frequently positioned cameras looking upward, showcasing the Metkayina clan’s swimming abilities against the backdrop of the filtered sky, so getting the surface behavior right in these shots was essential.
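The critical angle follows directly from Snell’s Law: it is the incidence angle at which the refracted ray would skim along the surface, so sin(critical angle) = n_air / n_water. The sketch below assumes a flat, calm surface and an index of 1.333 for water; the function names are illustrative. On an animated surface the local normal tilts with every wave, which is what makes the edge of Snell’s window ripple.

```python
import math

N_WATER = 1.333  # approximate refractive index of water (assumed)

def critical_angle_deg(n_inside=N_WATER, n_outside=1.0):
    """Incidence angle beyond which light is totally internally reflected."""
    return math.degrees(math.asin(n_outside / n_inside))

def sees_sky(view_angle_from_vertical_deg, n_inside=N_WATER):
    """True if an upward line of sight at this angle exits through a flat,
    calm surface (inside Snell's window); False if it is totally reflected."""
    return view_angle_from_vertical_deg < critical_angle_deg(n_inside)

print(f"critical angle: {critical_angle_deg():.1f} degrees")
print("looking 30 degrees off vertical sees sky:", sees_sky(30.0))
print("looking 60 degrees off vertical sees sky:", sees_sky(60.0))
```

Because the window’s half-angle is about 48.6 degrees, the entire sky above appears compressed into a cone roughly 97 degrees across, surrounded by the mirrored underwater scene.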

The rendering system calculated Fresnel equations for each ray intersecting the water surface, determining the ratio of reflected to transmitted light based on angle and polarization. Rays striking the surface at grazing angles were mostly reflected, while those closer to perpendicular transmitted more of the sky. The blended result matched the authentic appearance of real underwater photography looking toward the surface. Another significant challenge involved interaction between characters and water. When the Na’vi moved through the ocean, their bodies displaced water, created bubbles, and disrupted light patterns. Hair and clothing moved differently underwater than in air, and the light filtering through these elements required different calculations than solid surfaces. The subsurface scattering that makes skin appear lifelike also behaves differently underwater: the refractive index of water is much closer to that of skin than air’s is, so less light reflects at the skin surface and more of it enters. Weta developed specialized shaders that adjusted subsurface calculations based on whether characters were submerged, emerging from water, or transitioning between states.
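The Fresnel equations give the reflected fraction separately for light polarized perpendicular (s) and parallel (p) to the plane of incidence; averaging the two gives the reflectance for unpolarized light. The sketch below is the standard textbook form with an assumed water index of 1.333, and is not taken from Weta’s shaders.

```python
import math

def fresnel_reflectance(cos_i, n1, n2):
    """Unpolarized Fresnel reflectance for light going from medium n1 into n2.

    cos_i: cosine of the incidence angle (0 < cos_i <= 1).
    Returns the fraction reflected; the rest (1 - R) is transmitted.
    """
    sin2_t = (n1 / n2) ** 2 * (1.0 - cos_i * cos_i)
    if sin2_t >= 1.0:
        return 1.0  # total internal reflection
    cos_t = math.sqrt(1.0 - sin2_t)
    r_s = (n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)
    r_p = (n2 * cos_i - n1 * cos_t) / (n2 * cos_i + n1 * cos_t)
    return 0.5 * (r_s * r_s + r_p * r_p)

# Looking up from underwater: water (1.333, assumed) into air (1.0).
for angle in (0, 30, 45, 48, 60):
    r = fresnel_reflectance(math.cos(math.radians(angle)), 1.333, 1.0)
    print(f"{angle:2d} degrees from vertical: {r:.3f} reflected")
```

Looking straight up, only about 2 percent of the light is reflected. By 48 degrees, just inside the critical angle, the reflected share has climbed above 40 percent, and beyond the critical angle it is total, which is why the transition toward the mirror looks abrupt but not instantaneous.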

- **Total internal reflection simulation**: Camera angles looking upward through water correctly showed the sky-to-mirror transition at appropriate angles

- **Fresnel blending**: Surface interactions properly balanced reflection and transmission based on viewing geometry

- **Subsurface scattering adjustments**: Character skin rendering modified its calculations to account for the surrounding water’s higher refractive index

The Influence of Real Underwater Photography on Digital Recreation

James Cameron’s background as a deep-sea explorer directly influenced how Avatar’s underwater sequences were conceived and executed. Before production began, the team assembled extensive reference libraries of actual underwater footage, studying how light behaved in various oceanic conditions. Director of photography Russell Carpenter worked with Cameron to establish visual benchmarks drawn from real underwater cinematography. These references informed every aspect of the digital recreation, from fine details such as caustic intensity to broader concerns such as how visibility changed with depth and weather conditions.

The production also conducted original underwater photography sessions specifically designed to capture reference data. High-dynamic-range cameras recorded how bright caustics could become relative to shadowed areas. Color-calibrated charts were photographed at various depths to establish accurate absorption curves. Divers were filmed performing motions similar to planned character actions, providing reference for how real water responded to body movement. This empirical data supplemented the physics-based calculations, allowing artists to validate their simulations against documented reality.
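How the chart photographs were processed is not described here, but one straightforward approach is to fit an exponential falloff per color channel. Taking the logarithm of I(d) = I0 * exp(-k * d) turns it into a straight line, so ordinary least squares recovers the coefficient k. The helper and the synthetic readings below are hypothetical.

```python
import math

def fit_attenuation(depths_m, intensities):
    """Fit I(d) = I0 * exp(-k * d) by least squares on log(I).

    Returns (k, I0): the attenuation coefficient in 1/m and the fitted
    intensity extrapolated to zero depth.
    """
    if len(depths_m) != len(intensities) or len(depths_m) < 2:
        raise ValueError("need at least two matching depth/intensity pairs")
    ys = [math.log(v) for v in intensities]
    n = len(depths_m)
    mean_d = sum(depths_m) / n
    mean_y = sum(ys) / n
    sxx = sum((d - mean_d) ** 2 for d in depths_m)
    if sxx == 0.0:
        raise ValueError("depths must not all be equal")
    sxy = sum((d - mean_d) * (y - mean_y) for d, y in zip(depths_m, ys))
    slope = sxy / sxx
    return -slope, math.exp(mean_y - slope * mean_d)

# Synthetic red-channel readings of one chart patch, generated with k = 0.35.
depths = [1.0, 3.0, 5.0, 8.0]
readings = [0.9 * math.exp(-0.35 * d) for d in depths]
k, i0 = fit_attenuation(depths, readings)
print(f"k = {k:.3f} per meter, I0 = {i0:.3f}")
```

Because a chart at depth is lit by light that has already been absorbed and scattered on the way down, the fitted k is an effective attenuation coefficient for that water and lighting geometry rather than the pure absorption coefficient of water.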

How to Prepare

- **Study basic optics principles**: Familiarize yourself with Snell’s Law, which describes how light bends when passing between different media. The refractive index of water is approximately 1.33, compared with roughly 1.0 for air, and this ratio sets how strongly light bends at the surface. Because water’s index is higher, light entering it at any angle other than straight down bends toward the perpendicular.

- **Learn about light absorption in water**: Research how different wavelengths are absorbed at different rates as light travels through water. In clear ocean water, most red light is absorbed within the first 5 to 10 meters, while blue light can penetrate beyond 200 meters. This wavelength-dependent absorption creates the characteristic blue coloration of deep water scenes.

- **Examine real underwater photography**: Spend time studying actual underwater footage, paying attention to caustic patterns, color shifts, visibility reduction, and surface reflections. Training your eye to recognize authentic underwater lighting makes it easier to appreciate what CGI systems must simulate.

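- **Understand path tracing fundamentals**: Path tracing simulates light by tracing rays from the camera back toward light sources, calculating interactions along the way. Because it applies physically based rules at every bounce rather than ad hoc approximations, it handles phenomena like refraction naturally. Caustics are a known weak spot: sharp patterns from a small, bright source such as the sun seen through a water surface converge slowly when traced from the camera, which is why renderers often add techniques that trace light forward from the source.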

- **Review production materials**: Weta FX and other studios have released behind-the-scenes documentation about their underwater rendering systems. These technical breakdowns provide valuable insight into specific implementation details and the artistic decisions that guided technical development.
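The displacement of underwater objects mentioned at the start of this analysis can be worked out from Snell’s Law alone. For a viewer looking nearly straight down, the small-angle approximation (sin θ ≈ tan θ ≈ θ) gives the apparent depth d′ of an object at true depth d. Here n_a and n_w are the refractive indices of air and water, x is the horizontal offset at which a ray from the object meets the surface, and the 2 meter depth is just an example value.

$$
n_a\,\theta_a \approx n_w\,\theta_w,\qquad
\theta_w \approx \frac{x}{d},\qquad
\theta_a \approx \frac{x}{d'}
\quad\Longrightarrow\quad
d' \approx d\,\frac{n_a}{n_w} = \frac{2\ \text{m}}{1.333} \approx 1.5\ \text{m}
$$

So a pool floor 2 meters down appears to sit about half a meter closer than it really is.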

How to Apply This

- **Compare CGI to reference footage**: When watching Avatar’s underwater sequences, mentally compare what you see against your knowledge of real underwater photography. Notice how colors shift with depth, how caustic intensity changes with wave activity, and how visibility decreases toward the horizon.

- **Observe surface interactions**: Pay attention to scenes where characters break the water surface. Watch how light behavior changes as the camera passes through the air-water boundary, and notice the distortion visible through the rippling surface when looking upward.

- **Analyze caustic behavior**: Watch how caustic patterns move across characters and environments. In authentic simulation, these patterns should synchronize with visible wave motion and should fragment or coalesce as water surface conditions change.

- **Evaluate color consistency**: Notice whether underwater color grading respects physical absorption curves. Objects nearer the surface should retain more of their warm coloration, while those at depth should shift toward blue-green. Characters swimming between depths should show gradual color transitions.

Expert Tips

- **Watch extended underwater sequences multiple times**: The first viewing typically focuses on narrative content. Subsequent viewings allow you to observe technical details like how bubbles interact with light, how bioluminescence affects surrounding water color, and how particle scattering creates depth atmosphere.

- **Pay attention to eye adaptation**: Real underwater vision involves eye adaptation to lower light levels. Well-designed underwater CGI subtly adjusts exposure and contrast as characters move between bright surface areas and darker depths, mimicking how real vision would respond.

- **Notice edge behavior**: Refraction at object edges, particularly where transparent elements like fins or membranes appear, reveals the sophistication of the optical simulation. Color fringing at these boundaries can indicate wavelength-separated ray tracing, although similar fringing can also be added as a lens effect in compositing.

- **Consider computational constraints**: Understanding that each frame of complex underwater footage might require 100+ hours of rendering helps contextualize the technical achievement. These sequences represent not just artistic vision but massive infrastructure investment in rendering capability.

- **Compare to earlier underwater CGI**: Watching underwater sequences from films made 10 or 20 years ago highlights how dramatically the technology has advanced. Earlier films relied on post-process color grading and projected caustic textures that appear crude by contemporary standards.

Conclusion

The underwater light refraction and rendering systems developed for Avatar: The Way of Water represent a genuine inflection point in visual effects capability. What Cameron and Weta FX achieved was not an incremental improvement over previous underwater CGI but a fundamental reconception of how digital light could interact with virtual water. By committing to physically accurate simulation rather than traditional visual effects shortcuts, the production established new expectations for what audiences will accept as believable aquatic environments in film. And while Weta’s renderer itself is proprietary, the principles behind it are not secret.

Spectral rendering, volumetric absorption and scattering, and physically based caustic calculation are established techniques in computer graphics, and comparable approaches are increasingly available in commercial software and competing studio pipelines. For filmmakers and visual effects artists interested in underwater sequences, studying Avatar’s achievements provides both inspiration and practical insight into techniques likely to define aquatic CGI for the foreseeable future. The ocean of Pandora may be fictional, but the light that illuminates it follows the same physics that governs our own seas, finally rendered with the accuracy those physics deserve.

Frequently Asked Questions

Is this approach suitable for beginners?

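Yes. The preparation steps start from basic optics, such as Snell’s Law and wavelength-dependent absorption, and from watching real underwater footage. None of them require rendering software, and working through them in order builds the foundation needed to appreciate the more advanced techniques.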

What are the most common mistakes to avoid?

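Common mistakes in underwater CGI include applying a single post-process color filter instead of computing absorption along each ray’s path, reusing looping caustic textures across long sequences, and mishandling the transition to total internal reflection when the camera looks up toward the surface.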

How can I measure my progress effectively?

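Compare what you see against real underwater reference footage, using concrete cues: whether colors shift correctly with depth, whether caustics stay synchronized with wave motion, and whether the surface turns mirror-like at the right angles when the camera looks upward. Noting how many of these cues you can identify on each viewing gives a practical measure of how your eye is developing.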